AI that sees, understands, and acts: advances at the Robotics Center

Unlike consumer AI, which often focuses on retrospective analysis of large datasets, the work of CAOR, the Mines Paris – PSL Robotics Center, is subject to one key constraint: it must act in real time, in continuous interaction with an uncertain environment. Algorithms must be fast, robust, explainable where possible, and able to work with sometimes limited data. This constraint shapes all of the center's research, from environmental perception to action planning and the detailed understanding of human behavior.

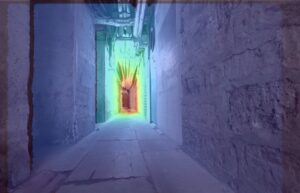

For several years, CAOR researchers have been exploring a counterintuitive idea: perceiving the geometry of a space through sound. The Omni-Batvision project is inspired by echolocation, the technique bats use to find their way. A sound signal is emitted; its echoes are captured by microphones and transformed into spectrograms, which are visual representations of how a sound's frequency content evolves over time. Deep learning models then translate these spectrograms into depth images that reveal the 3D structure of the environment, sometimes even beyond visible obstacles. Sound alone remains less accurate than vision, but fusing it with visual data significantly improves overall perception. In the long term, this work could lead to navigation aids for visually impaired people or improve the safety of mobile robots in complex environments.
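To make the first step of this pipeline concrete, here is a minimal sketch of how an echo recording can be turned into a magnitude spectrogram with a short-time Fourier transform. The frame size, hop length, and the `spectrogram` and `echo_distance` helpers are illustrative assumptions, not Omni-Batvision code, and the depth-estimation network that consumes the spectrogram is not shown. The second helper recalls the basic geometry behind echolocation: an echo travels to the obstacle and back, so the distance is half the round-trip delay multiplied by the speed of sound.

```python
import cmath
import math


def spectrogram(signal, frame_size=64, hop=32):
    """Magnitude spectrogram via a naive short-time Fourier transform (STFT).

    Returns one list per time frame, each holding frame_size // 2 + 1
    magnitudes for frequency bins 0 (DC) up to the Nyquist frequency.
    """
    # Periodic Hann window to limit spectral leakage at frame edges.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_size)
              for n in range(frame_size)]
    frames = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        chunk = [signal[start + n] * window[n] for n in range(frame_size)]
        bins = []
        for k in range(frame_size // 2 + 1):
            # Naive DFT coefficient for bin k (an FFT would be used in practice).
            coeff = sum(chunk[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                        for n in range(frame_size))
            bins.append(abs(coeff))
        frames.append(bins)
    return frames


def echo_distance(delay_s, speed_of_sound=343.0):
    """Distance to a reflector (metres) from the round-trip delay of its echo."""
    return speed_of_sound * delay_s / 2.0


# Usage: a 1 kHz tone sampled at 8 kHz falls in bin 1000 / (8000 / 64) = 8.
sample_rate = 8000
tone = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(512)]
spec = spectrogram(tone)
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
```

Each row of the result is one time frame and each column one frequency bin; stacking the rows gives the image-like array that a convolutional network can take as input.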

Another original approach is to act not only on the algorithms but also on the world they observe. In collaboration with Valeo, CAOR is developing intelligent lighting systems based on high-resolution LED headlights. An AI model learns to adapt the light beam dynamically so as to optimize computer vision tasks such as object detection or scene segmentation. In concrete terms, the system analyzes an image of the road under its current lighting, then proposes a lighting configuration that maximizes the quality of perception. Through learning, light becomes an active parameter of perception rather than mere illumination.
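The loop described here amounts to an optimization problem: choose the beam configuration that maximizes a perception score. The hypothetical sketch below, which is not the CAOR/Valeo system, makes that objective explicit with a brute-force search over a headlight split into a few segments with discrete intensity levels. `toy_score` is an invented stand-in for a real perception metric computed on the image captured under each lighting.

```python
from itertools import product


def best_lighting(score, n_segments, levels=(0.0, 0.5, 1.0)):
    """Brute-force search over per-segment headlight intensities.

    `score(config)` stands in for a perception-quality measure computed on the
    image captured under that lighting (e.g. detector confidence). The search
    costs len(levels) ** n_segments evaluations, which is why a learned model
    that proposes a configuration directly is attractive.
    """
    best_cfg, best_val = None, float("-inf")
    for cfg in product(levels, repeat=n_segments):
        val = score(cfg)
        if val > best_val:
            best_cfg, best_val = cfg, val
    return best_cfg, best_val


def toy_score(cfg):
    """Hypothetical scene: a pedestrian in segment 1, an oncoming driver in
    segment 2. Lighting the pedestrian helps, dazzling the driver is penalised,
    and every unit of light has a small energy cost."""
    return 0.5 * cfg[0] + 2.0 * cfg[1] - 3.0 * cfg[2] - 0.1 * sum(cfg)


config, value = best_lighting(toy_score, n_segments=3)
```

The exponential cost of the exhaustive search is the point of the illustration: a model that has learned to propose a good configuration from a single image avoids evaluating every candidate at driving speed.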

View of an environment from a robot in the Omni-Batvision project

3D perception is at the heart of modern robotics. At CAOR, several projects apply deep learning to 3D point clouds: large, unordered sets of points in space, typically produced by lidar sensors. Lidar (light detection and ranging) is a remote sensing technique that measures distances by analyzing a laser beam reflected by a target back to its emitter. Because point clouds are sparse and irregular rather than arranged on a regular pixel grid, they require specific convolution operations. Researchers are therefore developing new methods to automatically distinguish the ground, walls, objects, and people in a three-dimensional scene, capabilities that are essential for autonomous navigation, among other applications.
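Why point clouds need their own convolutions can be illustrated with a minimal continuous point convolution: instead of sliding a kernel over a pixel grid, each point aggregates the features of neighbours found within a radius, weighted by a kernel evaluated at their relative position. In this sketch the kernel is a fixed Gaussian, whereas point-cloud networks learn it; the function names and parameters are illustrative assumptions, not a reproduction of any CAOR architecture.

```python
import math


def point_conv(points, features, radius, kernel):
    """Continuous convolution on an unordered 3D point cloud.

    For each point p, sums kernel(q - p) * feature(q) over every point q within
    `radius` of p. Neighbourhoods are defined by distance rather than by a
    pixel grid, and the result does not depend on the storage order of points.
    This brute-force neighbour search is O(N^2); real pipelines use a k-d tree
    or a voxel grid.
    """
    out = []
    for p in points:
        acc = 0.0
        for q, f in zip(points, features):
            if math.dist(p, q) <= radius:
                offset = tuple(qi - pi for qi, pi in zip(q, p))
                acc += kernel(offset) * f
        out.append(acc)
    return out


def gaussian_kernel(offset, sigma=0.5):
    """Hypothetical fixed kernel; a network would learn this function instead."""
    d2 = sum(o * o for o in offset)
    return math.exp(-d2 / (2 * sigma * sigma))


# Two nearby points and one isolated point, each carrying a scalar feature.
pts = [(0.0, 0.0, 0.0), (0.3, 0.0, 0.0), (5.0, 5.0, 5.0)]
feats = [1.0, 2.0, 3.0]
out = point_conv(pts, feats, radius=1.0, kernel=gaussian_kernel)
```

Shuffling the input points only shuffles the outputs in the same way, which is the property a segmentation network needs when distinguishing ground, walls, objects, and people in raw lidar data.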

The center is also exploring learned 3D reconstruction methods such as Neural Radiance Fields (NeRF), which build an implicit 3D representation of a scene from 2D images, and Gaussian Splatting, which represents a scene as a collection of 3D Gaussians optimized from multiple images. Given a set of images and the corresponding camera positions, these models learn to reconstruct a complete scene and render it from new viewpoints. A key challenge is computation time, which has long ruled out interactive use. Recent work, particularly on virtual reality rendering, aims to drastically speed up these methods to enable smooth, realistic display. Applications range from heritage digitization to immersive training, robotics, and augmented reality.
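At the core of NeRF is a volume-rendering step: a network predicts a density sigma_i and a colour c_i at sample points along each camera ray, and the pixel colour is the weighted sum C = sum_i T_i * (1 - exp(-sigma_i * delta_i)) * c_i, where delta_i is the spacing between samples and T_i = exp(-sum_{j<i} sigma_j * delta_j) is the light still transmitted. The sketch below implements only this compositing step for a single ray, with made-up sample values in place of a trained network. It also shows where the computing cost comes from: every pixel needs many samples, each requiring a network evaluation. Gaussian Splatting blends depth-sorted Gaussians with the same front-to-back rule, although it rasterizes them rather than marching rays.

```python
import math


def render_ray(densities, colors, deltas):
    """Front-to-back compositing of samples along one camera ray.

    densities: volume density sigma_i >= 0 at each sample, nearest first
    colors:    (r, g, b) colour emitted at each sample
    deltas:    spacing delta_i between consecutive samples
    Returns the pixel colour and the weight T_i * alpha_i of each sample.
    """
    transmittance = 1.0  # T_i = exp(-sum_{j<i} sigma_j * delta_j)
    pixel = [0.0, 0.0, 0.0]
    weights = []
    for sigma, rgb, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha
        weights.append(weight)
        pixel = [p + weight * c for p, c in zip(pixel, rgb)]
        transmittance *= 1.0 - alpha  # light left after this segment
    return tuple(pixel), weights


# Empty space followed by a nearly opaque red surface: the pixel comes out red.
color, w = render_ray([0.0, 1e3], [(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)], [0.1, 0.1])
```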

Reconstruction of a boat scene based on Neural Radiance Fields (NeRF)

In industrial or craft environments, robots must understand what humans are doing, sometimes anticipate it, and adapt to their pace and to their body size. Much of CAOR's research therefore focuses on recognizing gestures and actions from video. The systems developed can estimate an operator's skeleton (body pose) in real time, identify their gestures, and understand the scene as a whole. To overcome the limitations of conventional approaches, researchers use advanced strategies for learning from little data and for coping with the variability of human gestures. Some work goes further and seeks to predict human movement: by analyzing time series of motions and forces, AI can anticipate future trajectories or exerted forces. This capability paves the way for more fluid collaborative robots that can adjust their behavior even before contact occurs.
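The variability problem mentioned above is easy to see with a classical, training-free technique: dynamic time warping (DTW), which compares two skeleton sequences while allowing one to be performed faster or slower than the other. DTW is swapped in here purely as an illustration and is not the learning method used at CAOR; the templates and the single tracked joint are hypothetical.

```python
import math


def dtw(seq_a, seq_b):
    """Dynamic time warping distance between two sequences of pose vectors."""
    n, m = len(seq_a), len(seq_b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(seq_a[i - 1], seq_b[j - 1])  # pose-to-pose distance
            cost[i][j] = d + min(cost[i - 1][j],       # a advances, b waits
                                 cost[i][j - 1],       # b advances, a waits
                                 cost[i - 1][j - 1])   # both advance
    return cost[n][m]


def classify(sequence, templates):
    """Label of the template gesture closest to `sequence` under DTW."""
    return min(templates, key=lambda label: dtw(sequence, templates[label]))


# Hypothetical templates: (x, y) wrist positions over time.
templates = {
    "raise": [(0.0, 0.0), (0.0, 1.0), (0.0, 2.0)],
    "swipe": [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)],
}
# The same "raise" gesture performed more slowly (five frames instead of three).
slow_raise = [(0.0, 0.0), (0.0, 0.5), (0.0, 1.0), (0.0, 1.5), (0.0, 2.0)]
label = classify(slow_raise, templates)
```

Nearest-template matching like this needs only one example per gesture, but it scales poorly and ignores context, which is part of what learned approaches aim to improve.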

Motion training data.

Localization and SLAM (Simultaneous Localization and Mapping) are another historical pillar of CAOR. Researchers combine different sources of information, such as cameras, inertial sensors, Wi-Fi signals, and even the Earth's magnetic field, so that robots can locate themselves accurately indoors, where GPS signals are unavailable or unreliable.
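Combining several position sources rests on a simple statistical idea: weight each source by how much it can be trusted. The minimal sketch below shows the static, one-dimensional case, inverse-variance weighting under the assumption of independent Gaussian errors. The readings and their variances are invented, and a real localization or SLAM system would embed this idea in a Kalman filter or a factor-graph optimizer over full poses.

```python
def fuse(estimates):
    """Combine independent (value, variance) estimates by inverse-variance weighting.

    Each source is weighted by 1 / variance, so precise sensors count more; the
    fused variance is smaller than that of any single input.
    """
    information = sum(1.0 / var for _, var in estimates)
    value = sum(x / var for x, var in estimates) / information
    return value, 1.0 / information


# Hypothetical x-coordinate readings (metres, metres^2) from three sources.
readings = [
    (10.0, 4.0),  # Wi-Fi based estimate: coarse
    (12.0, 1.0),  # visual-inertial estimate: finer
    (11.0, 2.0),  # magnetic-field matching
]
x, var = fuse(readings)
```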

Building on these perception capabilities, AI must make decisions. The center develops models that predict the future trajectories of vehicles and other road users, a key element of safe planning. In parallel, work in reinforcement learning has produced internationally recognized high-performance autopilots that learn driving strategies from experience.
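Learned trajectory predictors are commonly compared with a constant-velocity baseline using the average displacement error (ADE), the mean distance between predicted and actual future positions. The sketch below implements both; it is a generic baseline under the assumption of evenly spaced samples, not a CAOR model, and the pedestrian track is hypothetical.

```python
import math


def predict_constant_velocity(track, horizon):
    """Extrapolate the last observed per-step displacement `horizon` steps ahead."""
    (x0, y0), (x1, y1) = track[-2], track[-1]
    vx, vy = x1 - x0, y1 - y0  # displacement per time step
    return [(x1 + k * vx, y1 + k * vy) for k in range(1, horizon + 1)]


def average_displacement_error(predicted, actual):
    """Mean Euclidean distance between predicted and actual future positions."""
    return sum(math.dist(p, a) for p, a in zip(predicted, actual)) / len(actual)


# Hypothetical pedestrian positions (metres), one sample per time step.
observed = [(0.0, 0.0), (1.0, 0.5)]
forecast = predict_constant_velocity(observed, horizon=3)
```

A pedestrian who starts to turn quickly breaks the constant-velocity assumption, which is exactly where a learned predictor is expected to earn a lower ADE.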

A key feature of CAOR is the hybridization of physical models and machine learning. Rather than replacing traditional control and estimation approaches, AI enriches them.

Filters enhanced with neural networks, or approaches that combine physical laws with data, yield systems that are more robust, more interpretable, and often more efficient than purely data-driven solutions. This rigor is crucial for safety-critical applications such as robotics and autonomous vehicles.
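A minimal sketch of this hybrid idea, assuming a one-dimensional robot moving along a line: a Kalman filter whose prediction step uses the physical motion model plus a correction term that, in a real system, a trained neural network would supply. `residual` and `learned_slip` are hypothetical placeholders; the filters developed at CAOR are not described here in enough detail to reproduce.

```python
def hybrid_kalman_step(x, p, u, z, q, r, dt, residual=lambda u: 0.0):
    """One predict/update cycle of a 1-D Kalman filter with a learned correction.

    x, p     : current position estimate and its variance
    u        : commanded velocity (control input)
    z, r     : new position measurement and its variance
    q        : process-noise variance added at each prediction
    residual : stand-in for a trained network predicting the displacement the
               physical model misses (e.g. wheel slip). It depends only on u,
               so the state Jacobian stays 1 and the variance update is exact.
    """
    # Predict: physics (x + u * dt) plus the learned correction.
    x_pred = x + u * dt + residual(u)
    p_pred = p + q
    # Update: blend prediction and measurement using the Kalman gain.
    k = p_pred / (p_pred + r)
    x_new = x_pred + k * (z - x_pred)
    p_new = (1.0 - k) * p_pred
    return x_new, p_new


def learned_slip(u):
    """Hypothetical fitted correction: the robot travels 5 % less than commanded."""
    return -0.05 * u


x, p = hybrid_kalman_step(x=0.0, p=1.0, u=1.0, z=1.0, q=0.1, r=0.5,
                          dt=1.0, residual=learned_slip)
```

The physical model keeps the filter interpretable and well-behaved when the network is uncertain, while the learned term absorbs effects that are hard to model analytically.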

This work was showcased at the AI Workshop held at Mines Paris – PSL in December 2025. Designed as an internal event, the workshop allowed faculty, doctoral students, and engineers to present their projects, tools, and platforms through talks and posters.

Beyond the diversity of topics, the workshop highlighted a common dynamic: building AI that is grounded in reality, capable of interacting with humans and integrating into complex systems.

Looking ahead, CAOR researchers are interested in integrating foundation models, large-scale AI models trained on vast amounts of data, into robotics. The goal is to develop more general AI that can understand new situations and quickly learn new actions.

Through this research, the Mines Paris – PSL Robotics Center is affirming a clear vision: artificial intelligence that does not merely recognize or predict, but perceives, understands, and acts in a variety of practical applications.
